AI Testing Services


Software Releases with Flawless, Automated Testing

As software grows more complex and updates ship more frequently, traditional testing methods often fall short. Manual testing is slow and error-prone, and it frequently misses critical edge cases. The result is delays, performance issues, and bugs in production, all of which hurt the user experience and lead to costly rework.

Our testing services are designed to address these challenges head-on. We automate the testing process so that your software is validated continuously with every update and far fewer issues slip through. By generating test cases automatically, running them across multiple environments, and catching potential issues early, we give you confidence that your product performs as expected release after release.

We automate repetitive testing, such as regression checks and integration tests, using tools like Testim, Selenium, and pytest. This shortens testing cycles and keeps coverage comprehensive as your software grows. We also apply self-healing tests that adapt automatically to UI changes and new features, minimizing the need for manual intervention.
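A regression check in pytest is simply a test function that pins down known-good behavior, so a later change that alters it fails loudly. The following is a minimal sketch. It assumes a hypothetical `apply_discount` function as the code under test. In a real project that function would be imported from the application rather than defined inline.

```python
# test_pricing.py: a minimal pytest-style regression suite (sketch).
# `apply_discount` is a hypothetical stand-in; in a real project it would be
# imported from the application instead of defined here.


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Known-good (price, percent, expected) triples captured from a previous release.
REGRESSION_CASES = [
    (100.0, 0, 100.0),
    (100.0, 15, 85.0),
    (80.0, 25, 60.0),
    (200.0, 12.5, 175.0),
    (50.0, 100, 0.0),
]


def test_discount_regressions():
    # pytest collects any function named test_*; a plain assert is enough.
    for price, percent, expected in REGRESSION_CASES:
        assert apply_discount(price, percent) == expected, (price, percent)


def test_rejects_invalid_percent():
    # Invalid input must keep raising ValueError in every release.
    try:
        apply_discount(10.0, 120)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")
```

Running `pytest test_pricing.py` executes both tests, and the same command can run in CI on every commit. When pytest is installed, `pytest.raises` is the more idiomatic way to write the second test.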

The result? Faster release cycles, fewer bugs, and reduced downtime, all while keeping testing costs in check. You’ll have peace of mind knowing your application is thoroughly tested before it reaches your users.

Let us take testing off your plate so you can focus on scaling and improving your software.

The Impact:

80%: automates regression testing

50%: cuts testing time

30%: reduces debugging time


Derisk Your Software Systems

AI offers powerful benefits but also introduces significant risks. Our AI-powered software testing services help you identify and resolve issues early, reducing testing time by 50% and increasing reliability.

Manual test case creation is time-consuming and prone to errors. Missing edge cases or inconsistent coverage can result in critical bugs, delays, and costly fixes. As software becomes more complex, manual testing slows development and compromises quality.

Our Automated Test Generation service ensures thorough testing with minimal effort. We use Testim for dynamic test case generation and Applitools for visual validation, covering both functionality and UI. These tools test across environments, helping catch issues early, reduce testing time, and prevent bugs from reaching production.

As your software evolves, the tests adapt with it, so coverage keeps pace with new code. The result? Faster release cycles, higher-quality software, and reduced costs.

Example: When a new feature is added, our automated system generates relevant test cases for it, saving hours of manual work. This verifies that the feature functions as expected and reduces the risk of missed issues, enabling faster, more confident releases.
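Commercial test-generation tools differ in how they work internally. One simple, well-established technique that generators commonly apply is boundary-value analysis, which derives test cases from a field's declared limits. The following is a minimal sketch. It assumes a hypothetical `FieldSpec` that describes an integer input, such as a quantity field on a new feature. `validate_quantity` is a hypothetical function under test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical description of an integer input field."""
    name: str
    minimum: int
    maximum: int


def boundary_cases(spec):
    """Generate (value, should_be_accepted) pairs around each boundary."""
    lo, hi = spec.minimum, spec.maximum
    candidates = [lo - 1, lo, lo + 1, (lo + hi) // 2, hi - 1, hi, hi + 1]
    cases = []
    for value in dict.fromkeys(candidates):  # de-duplicate, keep order
        cases.append((value, lo <= value <= hi))
    return cases


def validate_quantity(value):
    """Hypothetical code under test: accepts 1 to 10 items per order."""
    return 1 <= value <= 10


# Every generated case becomes an assertion against the code under test.
for value, expected in boundary_cases(FieldSpec("quantity", 1, 10)):
    assert validate_quantity(value) == expected, value
```

When the spec changes, for example when the maximum rises to 20, the generated cases change with it and nobody has to edit them by hand.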

Changes in your application—whether UI updates or new features—can often break test cases, causing delays and inconsistent testing results. Manual updates to test scripts every time there’s a change can lead to errors and wasted resources.

We utilize Testim and Mabl for self-healing automation, which automatically adapts to changes in the application without manual intervention. These tools detect updates in your software—such as UI modifications or new features—and update the test scripts accordingly, ensuring the tests remain valid and accurate.

This automation helps your tests keep pace with ongoing changes, preventing spurious test failures and minimizing downtime. Your development team spends far less time fixing broken tests and can focus on delivering new features.

Example: When a button label changes, the self-healing system immediately updates the test script to accommodate this change, preventing test failures and ensuring uninterrupted testing cycles without delays or errors.
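Testim and Mabl do not publish their exact healing logic, so the following only illustrates the general idea:

- Try the recorded locator first.
- If it no longer matches, fall back to the element whose other attributes are the closest match.
- Return the healed locator so it can be saved.

The sketch assumes a hypothetical page model in which elements are plain dictionaries. A real implementation would locate elements through a browser driver such as Selenium.

```python
from difflib import SequenceMatcher


def _similarity(a, b):
    """Case-insensitive string similarity in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_element(elements, locator, threshold=0.6):
    """Return (element, healed_locator) for a recorded locator.

    `elements` is a list of dicts with 'id', 'tag' and 'text' keys, a
    hypothetical stand-in for the DOM. `locator` holds the id, tag and
    text recorded when the test was written.
    """
    # 1. The recorded id still matches: nothing to heal.
    for el in elements:
        if el["id"] == locator["id"]:
            return el, locator
    # 2. Fallback: the same-tag element whose visible text is most similar.
    candidates = [el for el in elements if el["tag"] == locator["tag"]]
    best = max(candidates,
               key=lambda el: _similarity(el["text"], locator["text"]),
               default=None)
    if best is None or _similarity(best["text"], locator["text"]) < threshold:
        raise LookupError(f"no element resembles {locator!r}")
    healed = {"id": best["id"], "tag": best["tag"], "text": best["text"]}
    return best, healed


# The button's id and label changed ("Submit order" -> "Submit your order").
page = [
    {"id": "checkout-submit", "tag": "button", "text": "Submit your order"},
    {"id": "cancel-btn", "tag": "button", "text": "Cancel"},
]
element, healed = find_element(
    page, {"id": "submit-btn", "tag": "button", "text": "Submit order"})
```

A healed locator should be logged for review rather than trusted silently. Otherwise a test could "heal" onto the wrong button and pass for the wrong reason.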

AI-powered software can unintentionally introduce bias, leading to unfair outcomes, errors, and poor user experiences. Without effective testing, these biases can go undetected, undermining your software’s reliability and trustworthiness.

We use AI-driven testing tools to automatically generate test cases that detect bias and fairness issues so they can be addressed. These tools simulate a wide range of real-world scenarios to check that your software behaves consistently and fairly for all users, regardless of their background or data inputs.

By proactively addressing bias in your software, we help ensure that your system complies with regulatory standards and builds trust with your users, all while improving software quality and performance.

Example: When you update your recruitment software, our testing system automatically checks the candidate selection process for bias. If the system starts favoring one demographic over another, the tests flag the issue so you can fix it before it affects candidates. This helps keep your hiring process fair, transparent, and free from unintended bias, so your software stays reliable and ethical.
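One common screening check for the recruitment example compares selection rates across groups. The sketch below computes the disparate impact ratio, which is the lowest group's selection rate divided by the highest. It flags the result when the ratio falls below 0.8, the "four-fifths" rule of thumb used in US employment-selection guidelines. The group labels and counts are hypothetical. Libraries such as Fairlearn and IBM AI Fairness 360 provide comparable metrics.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to the share of its candidates that were selected.

    `decisions` is an iterable of (group, selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_check(decisions, threshold=0.8):
    """Return (ratio, passed): min selection rate / max selection rate."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0, True  # nobody was selected, so no group is favored
    ratio = min(rates.values()) / highest
    return ratio, ratio >= threshold


# Hypothetical run: group A 20 of 50 selected (0.40), group B 12 of 50 (0.24).
decisions = [("A", i < 20) for i in range(50)] + [("B", i < 12) for i in range(50)]
ratio, passed = disparate_impact_check(decisions)  # ratio is about 0.6, so it fails
```

A failing check is a signal to investigate, not proof of unlawful bias. Sample sizes and legitimate job-related factors still need human review.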

As AI models are integrated into software, they can often behave like “black boxes,” where users cannot easily understand how decisions are made. This lack of transparency can cause confusion, mistrust, and even compliance issues.

To ensure your software’s AI components are transparent and understandable, we use explainability techniques like LIME and SHAP. These tools show how your software’s AI makes decisions by breaking down complex models and highlighting how much each feature contributed to a given prediction. This helps users trust your software, because they can see which factors drove each outcome.

Example: When your software rejects a loan application, explainability testing ensures that the user can clearly see which factors—like credit score or income—led to the decision, fostering trust and reducing user frustration.
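SHAP is built on Shapley values from game theory. For a model with only a few inputs, Shapley values can be computed exactly. The method averages each feature's marginal contribution over every coalition of the other features, with "absent" features replaced by baseline values. The scoring function, weights, applicant and baseline below are hypothetical. The SHAP library uses much faster estimators than this brute-force version.

```python
from itertools import combinations
from math import factorial


def loan_score(applicant):
    """Hypothetical linear approval score; higher means more likely to approve."""
    return (0.01 * applicant["credit_score"]
            + 0.00002 * applicant["income"]
            - 3.0 * applicant["debt_ratio"])


def shapley_values(model, instance, baseline):
    """Exact Shapley values, using `baseline` values for absent features."""
    features = list(instance)
    n = len(features)

    def value(coalition):
        # Features in the coalition take the instance's values; the rest use the baseline.
        mixed = {f: instance[f] if f in coalition else baseline[f] for f in features}
        return model(mixed)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


applicant = {"credit_score": 580, "income": 42000, "debt_ratio": 0.45}
baseline = {"credit_score": 700, "income": 60000, "debt_ratio": 0.30}
phi = shapley_values(loan_score, applicant, baseline)
```

Here the attributions are roughly -1.2 for credit score, -0.36 for income and -0.45 for debt ratio. They sum to the gap between the applicant's score and the baseline score. Sorted by magnitude, they tell the applicant that the below-baseline credit score weighed most heavily against approval.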

AI-driven software can be vulnerable to adversarial attacks, where malicious inputs manipulate AI models into making wrong predictions. These attacks can undermine the security and performance of your software, leading to customer distrust.

We simulate real-world adversarial attacks on your software to identify vulnerabilities and weaknesses in the system. Using tools like CleverHans, Burp Suite, and OWASP ZAP, we ensure that your AI-powered software can withstand manipulation. We also conduct stress tests with Apache JMeter to confirm that your software performs reliably under heavy user load and remains secure against potential attacks.

Example: If your software uses facial recognition to grant access, our testing will simulate attacks like photoshopped images to identify and fix vulnerabilities. This ensures your software accurately distinguishes between real and altered images, preventing unauthorized access and protecting sensitive information.
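CleverHans implements attacks such as the Fast Gradient Sign Method (FGSM). FGSM nudges every input feature by a small step ε in the direction that increases the model's loss. A dependency-free sketch against a toy logistic-regression classifier shows the idea. The weights and the "image" of three pixel intensities are hypothetical. Real attacks on facial recognition operate on full images and deep networks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability that input x belongs to the positive class ('authorized')."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fgsm(weights, bias, x, label, epsilon):
    """Fast Gradient Sign Method for a logistic-regression model.

    With cross-entropy loss, the gradient with respect to the input is
    (p - label) * w, so each feature moves epsilon in the sign of that
    gradient and is then clipped back into the valid [0, 1] pixel range.
    """
    p = predict(weights, bias, x)
    adversarial = []
    for w, xi in zip(weights, x):
        grad = (p - label) * w
        step = epsilon * (1 if grad > 0 else -1 if grad < 0 else 0)
        adversarial.append(min(1.0, max(0.0, xi + step)))
    return adversarial


weights, bias = [2.0, -1.5, 1.0], -1.0
impostor = [0.3, 0.6, 0.4]  # correctly rejected: p is about 0.29
altered = fgsm(weights, bias, impostor, label=0, epsilon=0.3)
# predict(weights, bias, altered) is about 0.61, so the altered input is accepted.
```

A robustness test turns this into an assertion: for a batch of inputs and a chosen ε, the model's decision should not flip. Here the decision does flip, which is exactly the kind of vulnerability such testing would report.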

Integrating AI models into your software development process requires continuous testing to ensure consistent quality. Without regular validation, bugs or performance issues can appear after deployment, negatively impacting user experience and functionality.

We integrate AI-powered testing directly into your DevOps pipeline using tools like Jenkins and GitLab CI. This ensures that every change made to your software is automatically tested, enabling rapid feedback and quick identification of potential issues. Continuous testing minimizes the risk of errors in production, ensuring a smoother and faster development cycle.

Example: When your e-commerce site updates its AI-powered product recommendation engine, continuous testing checks that the new recommendations are accurate and relevant, improving the customer experience and reducing the risk of errors or downtime after the update.
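A Jenkins or GitLab CI stage fails whenever its command exits with a non-zero status, so a model-quality gate only needs to return the right exit code. The following is a minimal sketch of such a gate for the recommendation example. It assumes three hypothetical inputs:

- each test user's top recommendations from the new model;
- the items each user actually engaged with in a held-out period;
- a baseline precision@k recorded for the previous release.

```python
def precision_at_k(recommended, relevant, k):
    """Average over users of the share of top-k recommendations that were relevant."""
    scores = []
    for user, items in recommended.items():
        top_k = items[:k]
        hits = sum(1 for item in top_k if item in relevant.get(user, set()))
        scores.append(hits / k)
    return sum(scores) / len(scores) if scores else 0.0


def quality_gate(recommended, relevant, baseline, k=3, tolerance=0.02):
    """Return exit code 0 if precision@k stayed within `tolerance` of baseline, else 1."""
    score = precision_at_k(recommended, relevant, k)
    print(f"precision@{k} = {score:.3f} (baseline {baseline:.3f})")
    return 0 if score >= baseline - tolerance else 1


# Hypothetical evaluation data; a real pipeline job would load these from files.
recommended = {"u1": ["a", "b", "c"], "u2": ["d", "e", "f"]}
relevant = {"u1": {"a", "c"}, "u2": {"e"}}
```

Calling `sys.exit(quality_gate(recommended, relevant, baseline=0.50))` at the end of the job's script makes the pipeline stage fail whenever recommendation quality regresses. The tolerance lets normal run-to-run noise pass without blocking a release.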

Still Relying on Manual Testing?

Build AI That Stands the Test of Time. Start AI Testing Today

Preventing Misdiagnoses, Fraud, and Bias with AI Testing

| Industry | How AI Testing Benefits Your Business |

| Healthcare | AI testing guarantees that diagnostic tools and medical software make accurate predictions, minimizing the risk of misdiagnoses. It also ensures sensitive patient data is protected, helping you meet regulatory compliance and deliver safer, more reliable care. |

| Fintech | With AI testing, we help detect fraud early and ensure risk management systems work as intended. Automated testing improves the security and trustworthiness of financial applications, ensuring that they meet both customer expectations and regulatory standards. |

| Retail | AI-powered testing enhances inventory management systems, ensuring accurate predictions and efficient stock management. Additionally, it validates personalized customer recommendations and experiences, ensuring fairness while protecting customer data. |

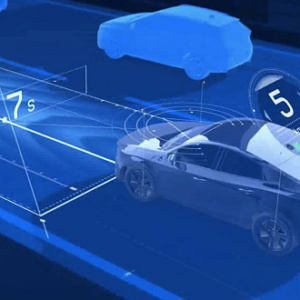

| Transportation | AI testing ensures the safety and reliability of self-driving systems and logistics software. By validating AI-driven algorithms, we help reduce operational errors, optimize routes, and enhance overall safety in transportation systems. |

| Education | AI testing guarantees that educational platforms offer fair, unbiased learning tools. It ensures AI systems provide equal opportunities for all students while protecting privacy, delivering effective, personalized learning experiences for diverse needs. |

Minimizing AI Failures, Maximizing Business Impact

We focus on ensuring accuracy, fairness, and compliance, helping your AI systems perform reliably while minimizing risks and operational disruptions.

In this initial phase, we conduct a collaborative design thinking workshop with your team to thoroughly understand the specific testing requirements of your software. We focus on your business goals, software complexity, and user expectations. This workshop helps us identify potential risk areas—such as data integrity, system performance, or compliance—and define clear quality objectives.

The outcome is a tailored, business-focused testing strategy that aligns with your performance, reliability, and security standards, ensuring a smoother, more efficient testing process.

Next, we perform a comprehensive audit of the data and models used in your software. We assess data accuracy, consistency, and potential biases, ensuring that the datasets driving your AI are high-quality and reflective of real-world conditions.

Our team uses advanced validation techniques to verify data integrity and evaluate the performance of AI models. The outcome is a robust, fair, and reliable AI system that not only meets performance standards but also aligns with your business objectives and regulatory requirements.
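Tools such as Great Expectations and Apache Deequ express data checks declaratively. The underlying idea fits in a few lines. This stdlib-only sketch uses hypothetical column names and rules. It reports missing values, out-of-range values and duplicate IDs in a list of records.

```python
def audit_records(records, required, ranges, key):
    """Return a list of human-readable data-quality issues.

    records:  list of dicts, one per row
    required: columns that must be present and non-null
    ranges:   {column: (min, max)} inclusive bounds for numeric columns
    key:      column whose values must be unique
    """
    issues = []
    seen_keys = set()
    for i, row in enumerate(records):
        for col in required:
            if row.get(col) is None:
                issues.append(f"row {i}: missing {col}")
        for col, (lo, hi) in ranges.items():
            value = row.get(col)
            if value is not None and not lo <= value <= hi:
                issues.append(f"row {i}: {col}={value} outside [{lo}, {hi}]")
        k = row.get(key)
        if k in seen_keys:
            issues.append(f"row {i}: duplicate {key}={k}")
        seen_keys.add(k)
    return issues


# Hypothetical customer records with three deliberate problems.
rows = [
    {"id": 1, "age": 34, "income": 52000},
    {"id": 2, "age": None, "income": 61000},
    {"id": 2, "age": 212, "income": 48000},
]
issues = audit_records(rows, required=["id", "age"], ranges={"age": (0, 120)}, key="id")
```

For these rows the audit reports the missing age in row 1, plus the impossible age and the duplicate ID in row 2. An empty list means the batch passed.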

We combine automated testing with expert manual reviews to uncover both obvious and subtle software issues. This combined approach tests your software thoroughly across your target environments. The outcome: efficient, high-quality software that performs reliably in real-world conditions.

To ensure transparency and build trust, we validate the decision-making processes within your software so that stakeholders understand how and why it behaves the way it does, which supports regulatory compliance. The result: a transparent, trustworthy software solution that meets both internal and external expectations.

We simulate potential security breaches and adversarial attacks, proactively identifying vulnerabilities in your software. This ensures your software is robust and secure, mitigating risks before they escalate. The outcome: a secure software system that is resilient against security threats.

We integrate testing into your continuous integration and delivery pipeline, allowing real-time testing of every software update. This proactive approach ensures that issues are caught early in the development process, preventing delays and disruptions. The result: faster, more efficient software development with minimal risk.

We use collaborative platforms to track bugs, gather feedback, and implement continuous improvements. This ensures quick resolutions and ongoing optimization of your software. The outcome: a software solution that evolves to meet your business needs and consistently delivers top-tier performance.

Post-deployment, we provide continuous monitoring to detect issues early and ensure compliance with industry regulations. Regular audits keep your software aligned with changing standards and market needs. The result: long-term, reliable software performance that drives success.

Tech Stack: Tools Powering Reliable AI Testing

| Category | Tools and Technologies |
|---|---|
| Data Quality Validation | Great Expectations, Apache Deequ |
| Model Testing Frameworks | TensorFlow Extended (TFX), MLflow |
| Bias & Fairness Analysis | IBM AI Fairness 360, Fairlearn |
| Explainability Tools | LIME, SHAP |
| Security Testing | CleverHans, Penetration Testing Tools |
| Automation Frameworks | Selenium, pytest |
| CI/CD Integration | Jenkins, GitLab CI, CircleCI |
| Documentation & Compliance | Confluence, RiskWatch, AuditBoard |


What to expect working with us.

We transform companies!

Codewave is an award-winning company that transforms businesses by generating ideas, building products, and accelerating growth.

Frequently asked questions

In AI-powered software testing, we use specialized tools to validate data quality, ensuring the input data for AI models is clean, accurate, and consistent. We assess issues such as missing data, inconsistencies, and data drift to ensure that AI systems are trained on reliable datasets, minimizing potential errors and performance gaps.

We evaluate models built with frameworks such as TensorFlow and PyTorch, using model evaluation tools that integrate with testing environments. These tools let us automate testing of AI-driven applications and verify that their algorithms and models perform well across diverse scenarios.

In AI-powered software testing, we focus on detecting and addressing biases in AI-driven decisions. We evaluate the model’s behavior across different user groups and input scenarios, ensuring fairness by identifying and correcting any potential biases that could affect the model’s performance or lead to unjust outcomes.

AI-powered software testing involves analyzing the inner workings of AI algorithms to make the decision-making process transparent. We focus on ensuring that stakeholders can understand how models arrive at decisions, providing clear explanations of AI predictions and actions. This helps build trust and meets compliance requirements for transparency.

Security testing for AI-powered software involves identifying vulnerabilities in the AI system’s code, data, and algorithms. We simulate potential threats, including adversarial attacks, to ensure the integrity of the AI models and prevent exploitation or manipulation of the AI-powered features in real-world applications.

Automation frameworks like Selenium, pytest, and Cypress are employed to streamline testing for AI-powered software. These frameworks help automate repetitive testing tasks, such as regression tests, ensuring that new AI-driven features integrate seamlessly with existing systems and continue to perform reliably.

CI/CD tools are integrated into AI-powered software testing workflows to automate testing as part of the continuous integration pipeline. This ensures that as new changes are made to the software or AI models, automated tests are executed to validate functionality, performance, and stability, helping maintain high-quality standards throughout development.

In AI-powered software testing, we maintain thorough documentation of all testing processes, results, and methodologies. This includes ensuring compliance with industry regulations, such as GDPR and CCPA, to verify that the AI models and software adhere to data privacy standards and other regulatory requirements.

To ensure reproducibility and traceability, we implement version control systems for both the AI models and the testing environment. This allows us to track changes, manage updates, and ensure that the AI-powered software can be reproduced or audited reliably when needed.
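Git tracks the code, but a test run is only traceable if you also know exactly which dataset and model files it used. A lightweight way to record this is to store content hashes of those files. The following is a minimal sketch that assumes hypothetical file paths. Tools such as MLflow or DVC offer fuller versions of this idea.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(artifacts, out_path):
    """Record the SHA-256 of each named artifact next to the test results."""
    manifest = {name: file_sha256(p) for name, p in artifacts.items()}
    Path(out_path).write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest


def verify_manifest(artifacts, manifest_path):
    """Return the names of artifacts whose current hash differs from the manifest."""
    recorded = json.loads(Path(manifest_path).read_text())
    return [name for name, p in artifacts.items()
            if file_sha256(p) != recorded.get(name)]
```

An audit re-runs `verify_manifest` against the stored manifest. An empty result confirms that the same data and model are in use, and any listed name shows exactly which artifact changed.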

Yes, AI-powered software is rigorously tested for compliance with data privacy laws like GDPR and CCPA. During testing, we assess how the software handles user data, ensuring that AI models are designed to protect personal information and comply with all relevant data protection regulations.

Scalability testing for AI models involves simulating heavy traffic and user interactions to ensure the AI-powered software can handle large volumes of data and users. We test the AI’s ability to scale effectively in production environments while maintaining consistent performance and reliability under stress.
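Dedicated tools such as Apache JMeter generate load at scale, but the shape of a scalability check is simple. Fire many concurrent requests, then assert on the error rate and the tail latency. The following is a stdlib sketch. `call_model` is a hypothetical stand-in for a request to your inference endpoint, and the request counts are placeholders.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def call_model(payload):
    """Hypothetical stand-in for an HTTP call to a model endpoint."""
    time.sleep(0.01)  # simulate roughly 10 ms of inference latency
    return {"score": len(payload) % 7}


def timed_call(payload):
    """Return (succeeded, elapsed_seconds) for one request."""
    start = time.perf_counter()
    try:
        call_model(payload)
        ok = True
    except Exception:  # any failure counts toward the error rate
        ok = False
    return ok, time.perf_counter() - start


def load_test(n_requests=200, concurrency=20):
    """Run requests concurrently; return (error_rate, p95_latency_seconds)."""
    payloads = [f"request-{i}" for i in range(n_requests)]
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, payloads))
    latencies = [elapsed for ok, elapsed in results if ok]
    error_rate = 1 - len(latencies) / n_requests
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20)[-1] if len(latencies) >= 2 else float("nan")
    return error_rate, p95
```

A test would then assert, for example, `error_rate == 0` and `p95 < 0.5`, using thresholds taken from your service-level objectives. Running the check at increasing concurrency shows where latency begins to degrade.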

After deployment, we implement continuous monitoring for AI-powered software to track the performance of AI models in real time. We identify issues such as data drift or performance degradation and make the necessary adjustments so the AI system stays aligned with business goals and keeps delivering accurate results over time.
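One widely used drift statistic is the Population Stability Index (PSI). PSI compares how a feature's values are distributed across bins at training time with how they are distributed in production: $\text{PSI} = \sum_i (a_i - e_i)\ln(a_i / e_i)$, where $e_i$ and $a_i$ are the expected and actual shares in bin $i$. The following is a stdlib sketch. The bin edges, the sample data and the 0.2 alert level are hypothetical. The alert level follows a common rule of thumb rather than a fixed standard.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Share of values falling in each bin defined by sorted interior `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # A small floor keeps empty bins from producing log(0) or division by zero.
    return [max(c / total, 1e-6) for c in counts]


def population_stability_index(expected, actual, edges):
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected, actual, edges, threshold=0.2):
    """Return (psi, alert) where alert is True when drift exceeds the threshold."""
    psi = population_stability_index(expected, actual, edges)
    return psi, psi > threshold


training = list(range(100))                 # hypothetical training-time feature values
production = [v + 40 for v in range(100)]   # the same feature after a shift
psi, alert = drift_alert(training, production, edges=[25, 50, 75])
```

In production, a scheduled job would compute this statistic for each input feature over a recent window and raise an alert or trigger retraining when the threshold is crossed.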